When you type an address into your web browser and are connected to a web server, a lot of decentralized magic happens within the span of a few seconds. Through the web, we have a practically infinite supply of media available to us.

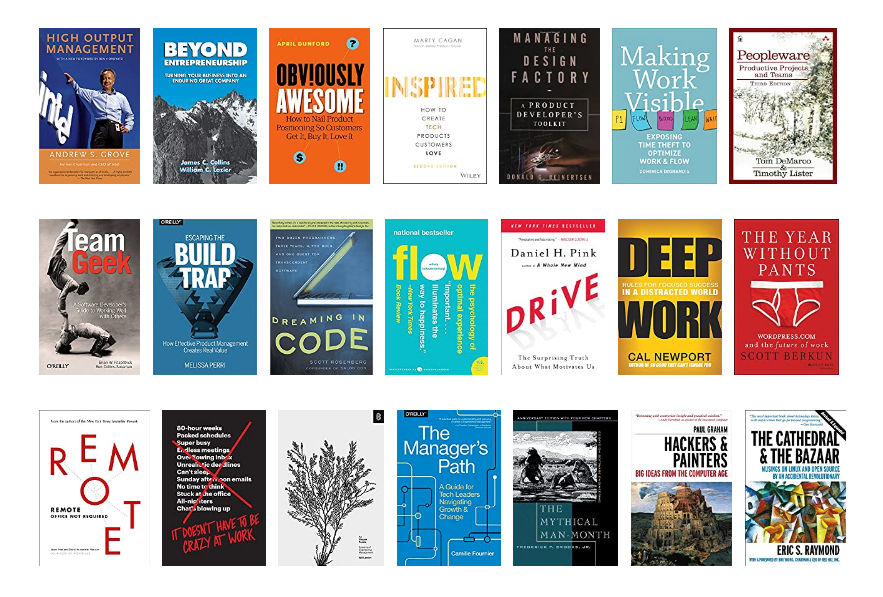

It is as though you have a beautifully maintained bookshelf, run your finger along the spines of the books, and then pluck out the one you want. But the sci-fi part, which is more science than fiction today, is that the bookshelf has millions of virtual entries and the information you want is delivered to you instantaneously. Once this virtual book is delivered (once a website is loaded), it can be frequently refreshed with real-time updates, and it exists in a form that can be navigated, searched, read, spoken, heard, shared, saved for later, or even automatically analyzed and summarized.

This is a lot of power for each individual to wield.

That is a lot of text to choose from, and to train your brain with.

And that is before you even count the world of paid digital books in Amazon’s Kindle empire. Incidentally, that empire need not cost you money in the US: you can often get (adequate) free access to it through your local library via the Libby app.

So, one thing is for sure: there are a lot of words to choose from when deciding what to read. But this also means that an individual faces a paradox of choice when they click into that blank address bar in their browser.

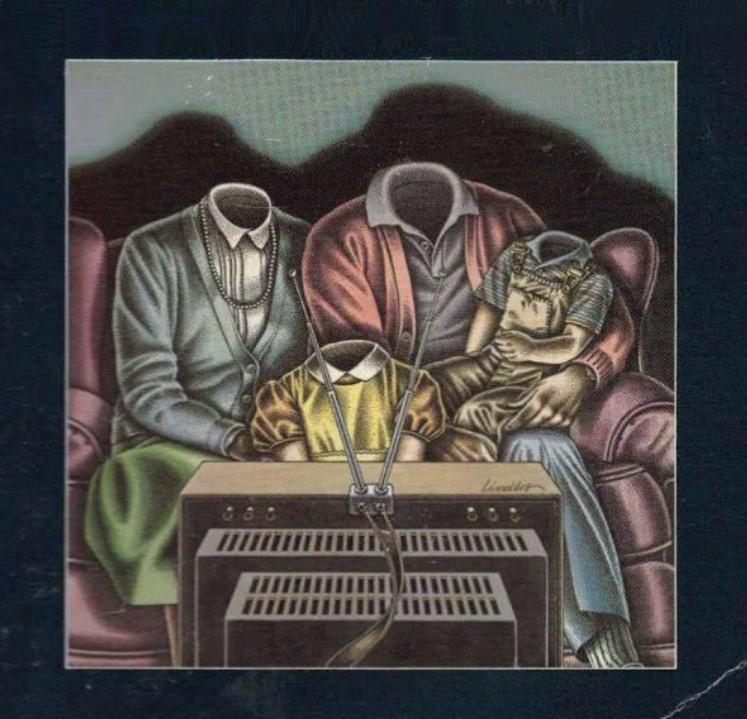

Will they, like so many others, ignore the address bar and the browser altogether? That is, despite having the “infinite bookshelf” at their fingertips, will they instead tap an app shortcut to one of the major passive content delivery platforms, like Facebook, YouTube, Instagram, or TikTok?

Recent research from Pew suggests that major passive-consumption mobile apps are used by a majority of Americans and, what’s more, that usage of the most video-forward of these (YouTube, Instagram, and TikTok) is nearly universal among people 18 to 29 years old. As for teens, 9 out of 10 of them are online (presumably via smartphones) every single day, and nearly 5 out of 10 are online “almost constantly.” These teen figures come from a separate 2023 report.

If you read between the lines of these two reports, what comes into focus is a culture of individuals addicted to the video streaming devices in their pockets, filling inevitable moments of boredom with hastily and cheaply produced sights and sounds, rather than retreating to the world of written words. And, unsurprisingly, people are reading less.
