After initial product-market fit and during a period of rapid customer adoption, the Parse.ly team embarked upon the task of re-envisioning its entire backend technology stack. The goal was to build upon the learnings of more than 2 years delivering real-time web content analytics, and use that knowledge to create the foundation for a scalable stream processing system that had built-in support for fault tolerance, data consistency, and query flexibility.

Parse.ly continued to run this new system successfully in production for many years after it shipped. Here’s what we learned about designing, building, shipping, and scaling the mythical “second system”.

The Second System Effect

But why re-design our existing system? This question lingered in our minds a few years back. After all, the first system was successful. And I had the lessons of Frederick Brooks accessible and nearby when I embarked on this project. He wrote in The Mythical Man-Month:

Sooner or later the first system is finished, and the architect, with firm confidence and a demonstrated mastery of that class of systems, is ready to build a second system.

This second is the most dangerous system a man ever designs.

When he does his third and later ones, his prior experiences will confirm each other as to the general characteristics of such systems, and their differences will identify those parts of his experience that are particular and not generalizable.

The general tendency is to over-design the second system, using all the ideas and frills that were cautiously sidetracked on the first one. The result, as Ovid says, is a “big pile.”

Were we suffering from engineering hubris to redesign a working system? Perhaps. But we may have been suffering from something else altogether healthy — the paranoia of a high-growth software startup.

Our product had only just been commercialized. We were a team small enough to be nimble, but large enough to be dangerous. Yes, there were only a handful of engineers. But we were operating at the scale of billions of analytics events per day, on-track to serve hundreds of enterprise customers who required low-latency analytics over terabytes of production data. We knew that scale was not just a “temporary problem”. It was going to be the problem. It was going to be relentless.

Innovation Leaps

Innovation doesn’t come incrementally; it comes in waves. And in this industry, yesterday’s “feat of engineering brilliance” is today’s “commodity functionality”.

This is where we found ourselves: we had a successful system with a lot of demands from customers, but no way to satisfy those demands, because the system had been designed with a heap of trade-offs that reflected “life on the edge,” working with large-scale analytics data.

Back then, we were eager adopters of tools like Hadoop Streaming, Apache Pig, ZeroMQ, and Redis, to handle both low-latency and large-batch analytics. We had already migrated away from these tools, refreshing our stack as the open source community shipped new usable tools.

Meanwhile, expectations had changed. Real-time was no longer innovative; it was expected. A cornucopia of nuanced metrics were no longer nice-to-haves; they were necessities for our customers.

It was no longer enough for our data to be “directionally correct” (our main goal during the “initial product/market fit” phase), but instead it needed to be enterprise-ready: verifiable, auditable, resilient, always-on. The web was changing, too. Assumptions we made about the kind of content our system was measuring shifted before our very eyes. Edge cases were becoming common cases. The exception was becoming the rule. Our data processing scale was already on track to 10x. We could see it going 100x. (It actually went 1000x.)

Once a new technology rolls over you, if you’re not part of the steamroller, you’re part of the road.

–Stewart Brand

So yes, we could have attempted an incremental improvement of the status quo. But doing so would have only resulted in an incremental step, not a giant leap. And incremental steps wouldn’t cut it.

Joel Spolsky famously said that rewriting a working system is something “you should never do”.

There’s a subtle reason that programmers always want to throw away the code and start over. The reason is that they think the old code is a mess. And here is the interesting observation: they are probably wrong.

The reason that they think the old code is a mess is because of a cardinal, fundamental law of programming: It’s harder to read code than to write it.

But what about Apple II vs Macintosh? Did Steve Jobs and his pirate team make a mistake in deciding to rethink everything from scratch — from the hardware to operating system to programming model — for their new product? Or was rethinking everything the price of admission for a high-growth tech company to continue to innovate in its space?

Big Rewrites are New Products

Perhaps we really have a problem of term definition.

When we think “rewrite”, we think “refactor”, as in change the codebase for a “zen garden” requirement, like code cleanliness or performance.

In software, we should admit that “big rewrites” aren’t about refactoring existing products — they are about building and shipping brand new products upon existing knowledge.

Perhaps what your existing product tells you is a core set of use cases which the new product must satisfy. But if your “rewrite” doesn’t support a whole set of new use cases, then it is probably doomed to be a waste of time.

If the Macintosh had shipped and was nothing more than a prettier, better-engineered Apple II, it would have been a failure. But the Macintosh represented a leap from command prompts to graphical user interfaces, and from keyboard-oriented control to the mouse. These two major innovations (among others) not only propelled Apple’s products into the mainstream, but also fueled the personal computing revolution!

So, forget rewrites. Think, new product launches. We re-use code and know-how, yes, but also organizational experience.

We eliminate sunk cost thinking and charge ahead. We fly the pirate flag.

But, we still keep our wits about us.

If you are writing a new product as a “rewrite”, then you should expect it to require as much attention to detail as shipping the original product took, with the added downsides of legacy expectations (how the product used to work) and inflated future expectations (how big an impact the new product should have).

Toward the Second System

So, how did we march toward this new launch? How did we do a big rewrite in such a way that we lived to tell the tale?

System One Problems

In the case of Parse.ly, one thing we did right was to focus on the actual, not imagined or theoretical, problems. We identified a few major problems with the first version of our system, which was in production 2012-2015.

- Data consistency problems due to pre-aggregation. Issues with data consistency and reliability were our number one support burden, and investigating them was our number one engineering distraction.

- Did not track analytics at the visitor and URL level. As our customers moved their web content from the “standard article” model to a number of new content forms, such as interactive features, quizzes, embedded audio/video, cards/streams, landing pages, mobile apps, and the like, the unit of tracking we used in our product/market fit MVP (the “article” or “post”) started to feel increasingly limiting.

- Only supported a single metric, the page view, or click. Our customers wanted to understand alternative metrics, segments, and source attributions, but we only supported this single metric throughout our databases and user interface. This metric was essentially a core assumption built throughout the system. That we only supported this one metric at first might seem surprising; after all, page views are a basic metric! But, that was precisely the point. During our initial product/market fit stage, we were trying to drain as much risk from the problem as possible. We were focusing on the core value, and market differentiation. We weren’t trying to prove the value of new metrics. We were trying to prove the value of a new category of analytics, which we called “content analytics”: the merger of content understanding (what the content is about) and content engagement (how many people visited the content and where they came from). From a technical standpoint, our MVP addressed both of these issues while only supporting a single metric, along with various filters and groupings thereof. Note: in retrospect, this was a brilliant bit of scope reduction in our early days. This MVP got us to our first million in annual revenue, and let us see all sorts of real-world data from real customers. The initial revenue gave us enough runway to survive to a Series A financing round, as well.

There were other purely technical problems with the existing system, too:

- Some components were single points of failure, such as our Redis real-time store.

- Our system did not work easily with multi-data-center setups, thus making failover and high availability harder to provide.

- Data was stored and queried in two places for real-time and historical queries (Redis vs MongoDB), making the client code that accessed the data complex.

- Rebuilding the data from raw logs was not possible for real-time data and was very complex for historical data.

- To support our popular API product, we had a completely separate codebase, which had to access and merge data from four different databases (Redis, MongoDB, Postgres, and Apache Solr).

System One Feats of Engineering

However, System One also did many things very well.

- Real-time and historical queries were satisfied with very good latencies; often milliseconds for real-time and below 1-2 seconds for historical data.

- Data collection was solid, tracking billions of page views per month and archiving them reliably in Amazon S3.

- Our API was serving a billion requests per month, with very stable latencies.

- Despite not supporting multiple data centers, we did have a working high availability and failover story that had worked for us so far.

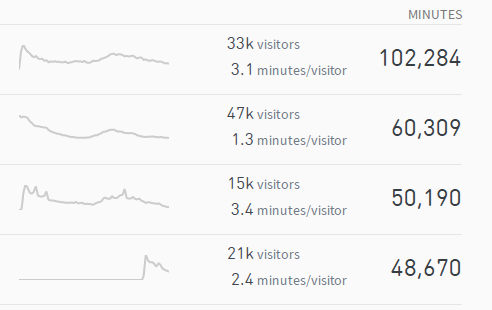

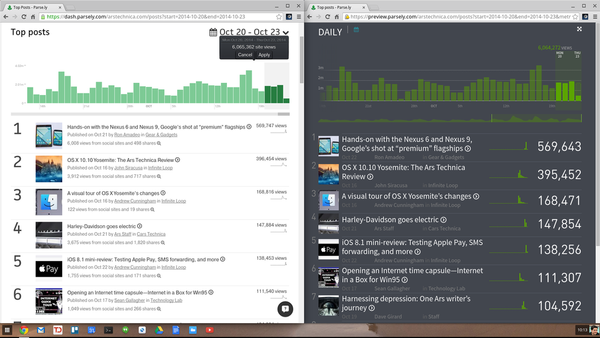

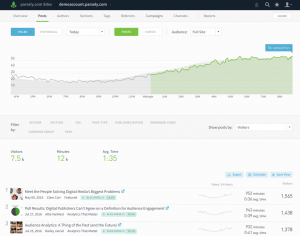

System One had also undergone its share of refactorings. It started its life as simple cronjobs, evolved into a Python and Celery/ZeroMQ system, and eventually into using Storm and Kafka. It had layered on “experimental support” for a couple of new metrics, namely visitors (via a clever implementation of HyperLogLog) and shares (via a clever share crawling subsystem). Both were proving to be popular data sources, though these metrics were only supported in a limited way, due to their experimental implementation. Throughout, System One’s data was being used to power rich dashboards with thousands of users per customer; CSV exports that drove decision-making; and APIs that powered sites the world over.
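For readers unfamiliar with HyperLogLog, the idea is to estimate the number of distinct items (here, visitors) in a small, fixed amount of memory rather than storing every ID. The sketch below is a textbook version of the algorithm, not Parse.ly's implementation, which this post does not describe; the class name, the register count, and the `visitor-N` IDs are all assumptions made for illustration.

```python
import hashlib
import math


class HyperLogLog:
    """Textbook HyperLogLog: approximate distinct counts in fixed memory."""

    def __init__(self, p=12):
        self.p = p                      # number of hash bits used as register index
        self.m = 1 << p                 # number of registers (4096 for p=12)
        self.registers = [0] * self.m
        # Standard bias-correction constant, valid for m >= 128
        self.alpha = 0.7213 / (1 + 1.079 / self.m)

    def add(self, item):
        # Take 64 bits of a stable hash of the item
        digest = hashlib.sha1(item.encode("utf-8")).digest()
        h = int.from_bytes(digest[:8], "big")
        idx = h >> (64 - self.p)                    # top p bits pick a register
        rest = h & ((1 << (64 - self.p)) - 1)       # remaining 64 - p bits
        # Rank = position of the leftmost 1-bit in the remaining bits
        rank = (64 - self.p) - rest.bit_length() + 1
        self.registers[idx] = max(self.registers[idx], rank)

    def count(self):
        raw = self.alpha * self.m ** 2 / sum(2.0 ** -r for r in self.registers)
        zeros = self.registers.count(0)
        if raw <= 2.5 * self.m and zeros:
            # Small-range correction: fall back to linear counting
            return self.m * math.log(self.m / zeros)
        return raw


hll = HyperLogLog()
for i in range(50_000):
    hll.add(f"visitor-{i}")
    hll.add(f"visitor-{i}")   # repeat visits do not change the estimate
estimate = hll.count()
```

With 4096 registers the expected relative error is roughly 1.04 / sqrt(4096), or about 1.6%, which is the usual trade-off: a small, bounded error in exchange for constant memory per counter.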

System Two Requirements

Based on all of this, we laid out our requirements for System Two, both customer oriented and technical.

Customer requirements:

- URL is basic unit of tracking; every URL is tracked.

- Supports multiple sources, segments, and metrics.

- Still supports page views and referrers.

- Adds visitor-oriented understanding and automatic social share counting — unique visitor counts and social interaction counts across URLs, posts, and content categories. The need for this was proven by our experiments in System One.

- Real-time queries for live updates.

- 5-minute buckets for past 24 hours and week-ago benchmarking.

- 1-day buckets for past 2 years.

- Low latency queries, especially for real-time data.

- Verifiably correct data.
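The two bucket sizes in the list above amount to flooring each event timestamp to a fixed boundary. Here is a minimal sketch of that idea; the function names are hypothetical, and the use of UTC for day boundaries is an assumption, since the post does not say how time zones were handled.

```python
from datetime import datetime, timedelta, timezone


def five_minute_bucket(ts):
    """Floor a timestamp to the start of its 5-minute bucket."""
    return ts.replace(minute=ts.minute - ts.minute % 5, second=0, microsecond=0)


def day_bucket(ts):
    """Floor a timestamp to midnight for 1-day buckets (UTC is assumed here)."""
    return ts.replace(hour=0, minute=0, second=0, microsecond=0)


def week_ago(bucket):
    """The bucket to benchmark against: same time of day, seven days earlier."""
    return bucket - timedelta(days=7)


# Number of 5-minute buckets needed to cover the past 24 hours
BUCKETS_PER_DAY = timedelta(hours=24) // timedelta(minutes=5)   # 288

event_time = datetime(2016, 3, 1, 14, 37, 42, tzinfo=timezone.utc)
bucket = five_minute_bucket(event_time)   # 2016-03-01 14:35:00+00:00
benchmark = week_ago(bucket)              # 2016-02-23 14:35:00+00:00
```

Covering 24 hours at 5-minute resolution takes 288 buckets per series, while two years at 1-day resolution takes about 730, so the coarse historical view is cheap compared with the fine-grained recent one.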

Technical requirements:

- Real-time processing can handle current firehose with ease — this started at 2k pixels per second, but 10x’ed to 20k pixels per second in 2016, and kept growing from there.

- Batch processing can do rebuilds of customer data and daily periods.

- The batch and real-time layers are simplified, with shared code among them in pure Python.

- Databases are linearly and horizontally scalable with more hardware.

- Data is persistent and highly available once stored.

- Query of real-time and historical data uses a unified time series engine.

- A common query language and client library is used across our dashboard and our API.

- Room in model for adding fundamentally new metrics (e.g. engaged time, video starts) without rearchitecting the entire system.

- Room in the model for adding new dimensions (e.g. URL-grouping campaign parameters, new data channels) without re-architecting the system or re-indexing production data.
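To put the firehose numbers in perspective, the per-second rates can be converted to daily and monthly volumes. This is back-of-the-envelope arithmetic that assumes the quoted rates are sustained averages (the post does not say whether they are averages or peaks) and uses a 30-day month.

```python
SECONDS_PER_DAY = 24 * 60 * 60   # 86,400


def events_per_day(rate_per_second):
    """Daily volume if the given rate were sustained around the clock."""
    return rate_per_second * SECONDS_PER_DAY


def events_per_month(rate_per_second, days=30):
    """Monthly volume under the same assumption, for a 30-day month."""
    return events_per_day(rate_per_second) * days


starting_rate = 2_000    # pixels per second at the start
rate_2016 = 20_000       # pixels per second after the 10x growth

daily_start = events_per_day(starting_rate)       # 172,800,000
monthly_start = events_per_month(starting_rate)   # 5,184,000,000
daily_2016 = events_per_day(rate_2016)            # 1,728,000,000
```

Under that assumption, 2k pixels per second is about 5 billion events per month, and 20k per second is about 1.7 billion per day, which lines up with the "billions per month" and "billions per day" figures quoted elsewhere in this post.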

Soft requirements that acted as a guiding light:

- Backend codebase should be much smaller.

- There should be fewer production data storage engines — ideally, one or two.

- System should be easier to reason about; the distributed cluster topology should fit on a single slide.

- Our frontend team should feel that much more user interface innovation is possible atop the new query language for real-time and historical data.

And with that, we charged ahead!

The First Prototype

First prototypes are where engineering hubris is at its height. This is because we can invent various scenarios that allow us to verify our own dreams. It is hard to be the cold scientist who proves the experiment a failure in the name of truth. Instead, we are emotionally tied up in our inventions, and want them to succeed.

Put another way:

The first 10 minutes of "Inception" — where they are 3 layers deep in their own dreams, looking for the "secret" idea that will solve everything, while the dreamscape collapses around them as they crash into reality — is kinda how software product management feels.

— Andrew Montalenti (@amontalenti) February 20, 2019

But sometimes, merely by believing we shall succeed, we can fashion a bespoke instrument of innovation that causes us to succeed.

In the First Prototype of this project, I wanted to prove two things:

- That we could have a simpler codebase.

- That the state-of-the-art in open source technology had moved far enough along to benefit us toward our concrete System Two goals.

To start things off, I created a little project for Cassandra and Elasticsearch experiments, which we code-named “casterisk”. I went as deep as I could to teach myself these two technologies. As part of the prototyping process, we also shared what we learned in technical deep-dive posts on Cassandra, Lucene, and Elasticsearch.

The First Prototype had data shaped like our data, but it wasn’t quite our data. It generated random but reasonable-looking customer traffic in a stream, and using the new tools available to me, I managed to restructure the data in myriad ways. Technically speaking, I now had Cassandra CQL tables representing log-structured data that could be scaled horizontally, and Elasticsearch indices representing aggregate records that could be queried across time and also scaled horizontally. The prototype was starting to look like a plausible system.
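The split between log-structured raw data and queryable aggregate records can be illustrated in a few lines of plain Python. This is only a sketch of the shape of the idea: the in-memory list stands in for the Cassandra tables, the `Counter` stands in for the Elasticsearch indices, and every field name, customer name, and URL is invented for the example.

```python
import random
from collections import Counter
from datetime import datetime, timedelta, timezone


def fake_events(n, seed=0):
    """Generate random but plausible-looking page view events."""
    rng = random.Random(seed)
    start = datetime(2015, 1, 1, tzinfo=timezone.utc)
    for _ in range(n):
        yield {
            "customer": rng.choice(["example.com", "example.org"]),
            "url": rng.choice(["/a", "/b", "/c"]),
            "ts": start + timedelta(seconds=rng.randrange(3600)),
        }


def rollup(events):
    """Aggregate raw events into per-(customer, url, 5-minute bucket) counts."""
    counts = Counter()
    for event in events:
        ts = event["ts"]
        bucket = ts.replace(minute=ts.minute - ts.minute % 5, second=0, microsecond=0)
        counts[(event["customer"], event["url"], bucket)] += 1
    return counts


raw_log = list(fake_events(1000))   # append-only log of raw events
aggregates = rollup(raw_log)        # derived records, cheap to query by time
rebuilt = rollup(raw_log)           # aggregates can always be rebuilt from the log
```

Because the aggregates are derived purely from the raw log, replaying the log reproduces them exactly, which is the property that makes rebuilds and audits possible.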

But then, it took about 3 months — from May to August — for the prototype to go from “R&D” to “pre-prod” stage. We then published a post detailing all the new metrics supported in our new “beta” backend system. But why did it take us so long, given the early advancements?

Recruiting a Team

You would think as CTO of a startup that I don’t need to recruit a team for an innovative new project. I certainly thought that. But I was wrong.

You see, the status quo is a powerful drug. Upon the first reports of my experiments, my team met me with suspicion and doubt. This was not due to any fault of their own. Smart engineers should be skeptical of prototypes and proposed re-architectures. Only when prototypes survive the harshest and most skeptical scrutiny can they blossom into production systems.

Building Our Own Bike

Steve Jobs once famously said that computers are like a “bicycle for the mind”, because they are a technology that lets us “move faster” than the naturally endowed speed of any other species.

Well, the creaky bike that seemed to be slowing us down in our usage of Apache Storm was an open source module called petrel. Now long unmaintained, at the time, it was the only way to run Python code on Apache Storm, which was how we reliably ran massively parallel real-time streaming jobs, overcoming Python’s global interpreter lock (GIL) and handling multi-node scale-out.

So, we built our own bike: streamparse. I discussed streamparse a bit at PyCon US, but the upshot is that it lets us run massively parallel stream processing jobs in pure Python atop Apache Storm. And it let us prototype those distributed stream processing clusters very, very quickly.

But though bikes let you move fast, if you build your own bike, you have to factor in how long it takes to build it — that is, while you’re standing still. And that’s exactly what we did for a few months.

This may have been a bit of scope creep. After all, we didn’t need streamparse to test out our new casterisk system. But it sure made testing it a whole lot easier. It let us run local tests of the clusters and it let us deploy multiple topologies in parallel that tweaked different settings. But it meant a new investment was required that was not the same as the core problem at hand.

Upgrading The Gears… While We Rode

The other bit of hubris that slowed us down: the alluring draw of “upgrades”.

Elasticsearch had just added its aggregation framework, which was exactly what we needed to do time series analysis against its records. It had also just added a new aggregate, cardinality, that we thought could satisfy some important use cases for us. Cassandra had a somewhat-buggy counter implementation in 2.0, but a complete re-implementation was around the corner in 2.1. We thought upgrading to it would save us, but then, we discovered counters were a bad idea altogether. Likewise, Storm had a stable release that we were already running in 0.8, but 0.9.2 was around the corner and was going to be the new stable. We upgraded to it, but then, discovered bugs in its network layer that stopped things from working. Our DevOps team reasonably pushed for a new “stable” Ubuntu version. We adopted it, thinking it’d be safe and stable. Turned out, we hit kernel/driver incompatibility problems with Xen, which were only triggered due to the scale of our bandwidth requirements.

So, all in all, we did several “upgrades to stable” that were actually bleeding edge upgrades in disguise. All while we were testing the systems in question with our own new Second System. The upgrades felt like “adopting a stable version”, but they were simply too new. If you upgrade the gears while riding the bike, you should expect to fall. This was one of the core lessons learned.
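To make the Elasticsearch pieces mentioned above concrete, here is a sketch of a search request body that combines a `date_histogram` aggregation with a `cardinality` sub-aggregation to get page views and approximate unique visitors per time bucket. The field names (`ts`, `page_views`, `visitor_id`) and the function name are assumptions for illustration, not Parse.ly's schema. The `fixed_interval` parameter is the spelling used by recent Elasticsearch versions; the releases available at the time used `interval`.

```python
import json


def visitors_over_time_query(start, end, interval="5m"):
    """Build a search body: per-bucket page views and approximate unique visitors."""
    return {
        "size": 0,  # only aggregation results are needed, not individual hits
        "query": {"range": {"ts": {"gte": start, "lt": end}}},
        "aggs": {
            "over_time": {
                "date_histogram": {"field": "ts", "fixed_interval": interval},
                "aggs": {
                    "page_views": {"sum": {"field": "page_views"}},
                    # cardinality returns an approximate distinct count
                    "unique_visitors": {"cardinality": {"field": "visitor_id"}},
                },
            }
        },
    }


query = visitors_over_time_query("now-24h", "now")
body = json.dumps(query)   # JSON body to send to a search endpoint
```

The same function with `interval="1d"` and a wider time range would cover the historical view, which is the appeal of querying real-time and historical data through one engine.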

Taking a Couple of Fun Detours

The project seemed like it had already been distracted a bit by streamparse development and new database versions, but now a couple of fun detours also emerged from the noise. These were “good” detours. For example: we built an “engaged time” experiment that showcased our ability to track and measure a brand new metric, which had a very different shape from page views and visitors. We proved we could measure the metric effectively with our new system, and report on it in the context of our other metrics. It turns out, this metric was a driving force for adoption of our product in later months and years.

Our “referrer” experiment showed that we’d be able to satisfy several important queries in the eventually-delivered full system. Namely, we could break out every traffic source category, domain, and URL in full detail, both in real-time and over long historical periods. This made our traffic source analytics more powerful than anything else on the market.

Our visits and sessions experiment showed our ability to do distinct counts just as well as (albeit more slowly than) integer counts. Our “new vs returning” visit classifier had not just one, but two, rewrites atop different data structures, before eventually succumbing to a third rewrite that removed some functionality altogether. The funny thing is, these two attempts were eventually thrown away as we replaced them with a much simpler solution (called client-side sessionization, where we establish sessions in the JavaScript tracker rather than on the server). But, it was still a “good” detour, because it resulted in us shipping total visitors, new visitors, and returning visitors as real-time and historical metrics in our core dashboard, something that our competitors have still failed to deliver, years later.
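Sessionization itself is a simple idea: consecutive events from one visitor belong to the same session until there is a long enough gap of inactivity. The sketch below shows that logic in Python for consistency with the rest of this post, even though the production version runs in the JavaScript tracker. The 30-minute timeout and the rule that a visitor's first session is "new" and later ones are "returning" are assumptions for illustration; the post does not specify either.

```python
SESSION_TIMEOUT = 30 * 60   # seconds of inactivity that end a session (assumed value)


def sessionize(timestamps, timeout=SESSION_TIMEOUT):
    """Group one visitor's event times (in seconds) into sessions split by inactivity."""
    sessions = []
    for ts in sorted(timestamps):
        if sessions and ts - sessions[-1][-1] <= timeout:
            sessions[-1].append(ts)     # still within the current session
        else:
            sessions.append([ts])       # gap too long: start a new session
    return sessions


def classify(sessions):
    """Label the first session 'new' and every later one 'returning'."""
    return ["new" if i == 0 else "returning" for i in range(len(sessions))]


events = [0, 60, 120, 4000, 4100, 10000]
sessions = sessionize(events)   # [[0, 60, 120], [4000, 4100], [10000]]
labels = classify(sessions)     # ['new', 'returning', 'returning']
```

Doing this in the tracker means each event can arrive already tagged with its session, so the backend only needs to count sessions rather than reconstruct them.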

These detours all had the feeling of engineers innovating at their best, but also meant multi-month delays in the delivery of an end-to-end working system.

Arriving at the Destination

Despite all this, in early August, we called a huddle to say we were finally going to be done with our biking tour. It was a new bike, it was fast, its gears were upgraded, and it was running as smoothly as it was going to. This led to the October, November, December period, which was among our team’s most productive during the Second System period. “Parse.ly Preview” was built, tested, and delivered, as the data proved its value and flexibility.

We ran the new system side-by-side with the old system. This was hard to do, but an absolute hard requirement for reducing risk. That’s another lesson learned: something we definitely did right, and that any future rewrites should consider to be a hard requirement, as well.
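One way to get value out of a side-by-side run is to diff the two systems' answers to the same question and review anything that disagrees. The helper below is a hypothetical sketch of such a check, not the tooling we actually used; the function name, the 1% relative tolerance, and the sample counts are all made up.

```python
def compare_systems(old_counts, new_counts, tolerance=0.01):
    """Return keys that are missing from one system or differ beyond the tolerance."""
    mismatches = {}
    for key in old_counts.keys() | new_counts.keys():
        old = old_counts.get(key)
        new = new_counts.get(key)
        if old is None or new is None:
            mismatches[key] = (old, new)            # present in only one system
        elif abs(new - old) > tolerance * max(old, 1):
            mismatches[key] = (old, new)            # relative difference too large
    return mismatches


old_system = {"/a": 1000, "/b": 500, "/c": 10}
new_system = {"/a": 1005, "/b": 450, "/d": 3}
report = compare_systems(old_system, new_system)
# report == {"/b": (500, 450), "/c": (10, None), "/d": (None, 3)}
```

A mismatch does not say which system is wrong. Given the old system's known consistency problems, each difference is a prompt to check against the raw logs rather than an automatic bug in the new code.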

The new system was iteratively refined, while also making the backend more stable and performant. We updated our blog post on Mage: The Magical Time Series Backend Behind Parse.ly Analytics to reflect the shipped reality of the working system. I presented our usage of Elasticsearch as a large-scale production time series engine at Elastic{on}, where I got to know some members of the Elastic team who had worked on the aggregation engine that we managed to abstract over in Mage. We cut our customers over to the new system, just as our old system was hitting its hard scaling limits. It felt great.

Several new features were launched atop it in the following years, including a new version of our API, a native iOS app, a new “homepage overlay” tool, new full-screen dashboards, new filters and new reports. We shipped campaign tracking, channel tracking, non-post tracking, video tracking — all of which would have been impossible in the old system.

We’ve continued to ship feature after feature atop “casterisk” and “mage” since then. We expanded the scope of Parse.ly toward tracking more than just articles, including videos, landing pages, and other content types. We now support advanced custom segmentation of audience by subscription status, loyalty level, geographic region, and so on. We support custom events that are delivered through to a hosted data pipeline, which customers can use for raw data analysis and auditing. In other words, atop this rewritten backend, our product just kept getting better and better.

Meanwhile, we have kept up with 100% annual growth in monthly data capture rate, along with a 1000x growth in historical data volume. All thanks to our team’s engineering ingenuity, thanks to our willingness to pop open the hood and modify the engine, and thanks to the magic of linear hardware scaling.

Brooks was right

Brooks was right to say, in his typically gendered way, that the second system “is the most dangerous system a man ever designs”.

Building and shipping that Second System in the context of a startup evolving its “MVP” into “production system” has its own challenges. But though backend rewrites are hard and painful, sometimes the price of progress is to rethink everything.

Having Brooks in mind while you do so ensures that when you redesign your bike, you truly end up with a lighter, faster, better bike — and not an unstable unicycle that can never ride, or an overweight airplane that can never fly.

Doing this wasn’t easy. But, watching this production system grow over the last few years to support over 300 enterprise customers in their daily work, to serve as a base for cutting-edge natural language processing technology, to answer the toughest questions of content attribution — has been the most satisfying professional experiment of my life. There’s still so much more to do.

So, now that we’ve nailed value and scale, what’s next? My bet’s on scaling this value to the entire web.
