I’m glad to say that the last few months have been a return to the world of day-to-day coding and software craftsmanship for me.

To give a taste of what I’ve been working on, I’m going to take you on a tour through some damn good Python software I’ve been using day-to-day lately.

Python 3 and subprocess.run()

I have a long relationship with Python, and a lot of trust in the Python community. Python 3 continues to impress me with useful ergonomic improvements that come up in real-world day-to-day programming.

One such improvement is in the subprocess module in Python’s standard library. Sure, this module can show its age; it was originally written over 20 years ago. But as someone who has been doing a lot of work at the level of UNIX processes lately, I’ve been enjoying how much it can abstract away, especially on Linux systems.

Here’s some code that calls the UNIX command exiftool to strip away EXIF metadata from an image, while suppressing stdout and stderr via redirection to /dev/null.

```python
# stripexif.py
import sys
from subprocess import run, DEVNULL, CalledProcessError

assert len(sys.argv) == 2, "Missing argument"
filename = sys.argv[1]

print(f"Stripping exif metadata from {filename}...")
try:
    # -all= clears every metadata tag; -overwrite_original edits the file in place
    cmd = ["exiftool", "-all=", "-overwrite_original", filename]
    # check=True raises CalledProcessError on a non-zero exit code
    run(cmd, stdout=DEVNULL, stderr=DEVNULL, check=True)
except CalledProcessError as ex:
    print(f"exiftool returned error:\n{ex}")
    print(f"try this command: {' '.join(cmd)}")
    print(f"to retry w/ file: {filename}")
    sys.exit(1)
print("Done.")
sys.exit(0)
```

If you invoke python3 stripexif.py myfile.jpg, you’ll get exif metadata stripped from that file, so long as exiftool is installed on your system.

The .run() function was added in Python 3.5, as a way to more conveniently use subprocess.Popen for simple command executions like this. Note that due to check=True, if exiftool returns a non-zero exit code, it will raise a CalledProcessError exception. That’s why I then catch that exception to offer the user a way to debug or retry the command.
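To see the check=True behavior in isolation, here is a minimal sketch that doesn't need exiftool. It uses the current Python interpreter (sys.executable) as a stand-in child process that exits with a chosen status code. The helper name run_quietly is hypothetical, made up for this example; it is not part of the subprocess module.

```python
import sys
from subprocess import run, DEVNULL, CalledProcessError


def run_quietly(cmd):
    """Run cmd with output discarded; return its exit code instead of raising."""
    try:
        # check=True turns any non-zero exit status into CalledProcessError
        run(cmd, stdout=DEVNULL, stderr=DEVNULL, check=True)
    except CalledProcessError as ex:
        # the exception carries the failing command (ex.cmd) and its exit status
        return ex.returncode
    return 0


# Stand-in child processes: the Python interpreter itself, exiting 0 or 3.
ok_cmd = [sys.executable, "-c", "import sys; sys.exit(0)"]
bad_cmd = [sys.executable, "-c", "import sys; sys.exit(3)"]

print(run_quietly(ok_cmd))
print(run_quietly(bad_cmd))
```

Without check=True, run() would simply return a CompletedProcess in both cases, and it would be up to the caller to look at its returncode attribute.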

If you want even more detailed usage instructions for subprocess, skip the official docs and instead jump right to the Python Module of the Week (PyMOTW) subprocess article. It was updated in 2018 (well after Python 3’s improvements) and includes many more detailed examples than the official docs. [1]

Settling on make and make help

I’ve been using a Makefile to automate the tooling for a couple of Python command-line tools I’ve been working on lately.

First, I add this little one-liner to get a make help subcommand. So, starting with a Makefile like this:

```makefile
all: compile lint format # all tools

compile: # compile requirements with uv
	uv pip compile requirements.in >requirements.txt

PYFILES = main.py

lint: # ruff check tool
	ruff check $(PYFILES)

format: # ruff format tool
	ruff format $(PYFILES)
```

We can add the help target one-liner, and get make help output like this:

```
❯ make help
all: all tools
compile: compile requirements with uv
format: ruff format tool
help: show help for each of the Makefile recipes
lint: ruff check tool
```
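As an illustration of what a help target like this has to do (this is not the one-liner itself), here is a rough Python sketch: scan the Makefile text for lines of the form "target: prerequisites # comment" and print each target with its comment, sorted by name. The function name makefile_help and the regular expression are assumptions made for this example.

```python
import re

# Matches "target: [prerequisites] # help text".
# Recipe lines (leading tab) and variable assignments do not match.
TARGET_RE = re.compile(r"^([A-Za-z0-9_.-]+):[^#=]*#\s*(.*)$")


def makefile_help(text):
    """Return sorted "target: help text" lines for commented targets."""
    entries = []
    for line in text.splitlines():
        match = TARGET_RE.match(line)
        if match:
            target, comment = match.groups()
            entries.append(f"{target}: {comment}")
    return sorted(entries)


# The example Makefile from above, with recipe lines indented by tabs.
EXAMPLE = """\
all: compile lint format # all tools

compile: # compile requirements with uv
\tuv pip compile requirements.in >requirements.txt

PYFILES = main.py

lint: # ruff check tool
\truff check $(PYFILES)

format: # ruff format tool
\truff format $(PYFILES)
"""

for entry in makefile_help(EXAMPLE):
    print(entry)
```

The convention that makes this work is simply that every target worth documenting carries a trailing # comment on its rule line; targets without one stay out of the help listing.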

I know all about the make alternatives out there in the open source world, including, for example, just with its justfile, and task with its Taskfile. But make, despite its quirks, is everywhere, and it works. It has staying power. Clearly: it has been around (and widely used) for decades. Is it perfect? No. But no software is, really.

With the simple make help one-liner above, the number one problem I had with make — that is, there is usually no quick way to see what make targets are available in a given Makefile — is solved with a single line of boilerplate.

My Makefile brings us to some more rather good Python software that entered my world recently: uv, ruff check and ruff format. Let’s talk about each of these in turn.

Upgrading pip and pip-tools to uv

uv might just make you love Python packaging again. You can read more about uv here, but I’m going to discuss the narrow role it has started to play in my projects.

I have a post from a couple years back entitled, “How Python programmers can uncontroversially approach build, dependency, and packaging tooling”. In that post, I suggest the following “minimalist” Python dependency management tools:

- pyenv
- pyenv-virtualenv
- pip
- pip-tools

I still consider pyenv and pyenv-virtualenv to be godsends, and I use them to manage my local Python versions and to create virtualenvs for my various Python projects.

But, I can now heartily recommend replacing pip-tools with uv (via invocations like uv pip compile). What’s more, I also recommend using uv to build your own “throwaway” virtualenvs for development, using uv venv and uv pip install. For example, here are a couple of make targets I use to build a local development environment venv, called “devenv,” using some extra requirements. In my case (and I don’t think I’m alone here), local development often involves rather complex requirements like jupyter for the ipython REPL and jupyter notebook browser app.

```makefile
TEMP_FILE := $(shell mktemp)
compile-dev: requirements.in requirements-dev.in # compile requirements for dev venv
	cat requirements.in requirements-dev.in >$(TEMP_FILE) \
		&& uv pip compile $(TEMP_FILE) >requirements-dev.txt \
		&& sed -i "s,$(TEMP_FILE),requirements-dev.txt,g" requirements-dev.txt

.PHONY: devenv
SHELL := /bin/bash
devenv: compile-dev # make a development environment venv (in ./devenv)
	uv venv devenv
	VIRTUAL_ENV="${PWD}/devenv" uv pip install -r requirements-dev.txt
	@echo "Done."
	@echo
	@echo "Activate devenv:"
	@echo "    source devenv/bin/activate"
	@echo
	@echo "Then, run ipython:"
	@echo "    ipython"
```

This lets me keep my requirements for my Python project clean even while having access to a “bulkier,” but throwaway, virtualenv folder, which is named ./devenv.

Since uv is such a fast dependency resolver and has such a clever Python package caching strategy, it can build these throwaway virtualenvs in mere seconds. And if I want to try out a new dependency, I can throw it in requirements-dev.in, build a new venv, try it out, and simply blow the folder away if I decide against the library. (I try out, and then decide against, a lot of libraries. See also “Dependency rejection”.)

I also use uv as a drop-in replacement for pip-compile from pip-tools. Thus this target, which we’ve already seen above:

```makefile
compile: # compile requirements with uv
	uv pip compile requirements.in >requirements.txt
```

I’ll commit both requirements.in and requirements.txt to source control. In requirements.in there is just a simple listing of dependencies, with optional version pinning, whereas requirements.txt is something akin to a “lockfile” for this project’s dependencies.

You can also speed up the install of requirements.txt into the pyenv-virtualenv using the uv pip install drop-in replacement, that is, via uv pip install -r requirements.txt.

There are also some pip-tools style conveniences in uv, such as:

- uv pip sync, to “sync” a requirements.txt file into your current venv
- uv pip list, to get a table of dependencies in the current venv
- uv pip freeze, to get a “lockfile-style” or “frozen-dependencies-style” printout, with dependencies pinned to exact version numbers

By the way, you might wonder how to install uv in your local development environment. It likes to be installed globally, similar to pyenv, thus I suggest their curl-based one-line standalone installer, found in the uv project README.md.

Update from August 2024: It looks like uv continues to grow in popularity, especially with their 0.3.x and 0.4.x releases. Read more at their update blog post here, uv: Unified Python Packaging. It looks like uv is expanding to take on some of the use cases covered by rye and pyenv. Thus their use of the word “unified.” I think that’s very encouraging and I still heartily recommend uv as part of your new “Good Python Software” stack.

Upgrading flake8 to ruff check

In that same article on Python tooling, and also in my style guide on Python, I mention using flake8. Well, ruff is a new linter for Python, written by the same team as uv, whose goal is not only to be a faster linter than PyLint, but also to bundle linting rules to replace tools like flake8 and isort. The recent 0.4.0 release blog post discusses how the already-fast ruff linter became 2x faster. It’s thus too fast (and comprehensive) to ignore, at this point.

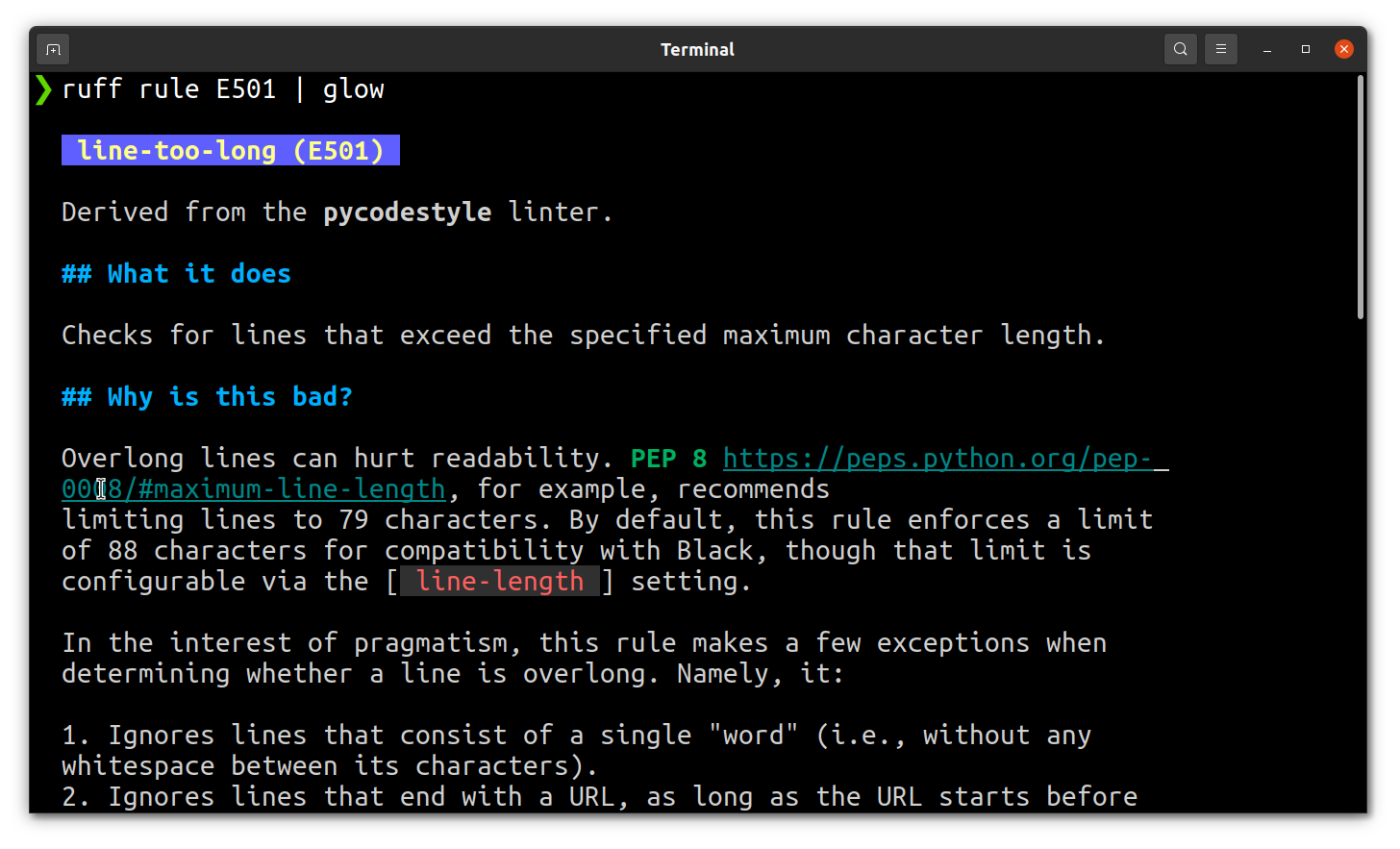

Since I have long loved the idea of making a developer’s life easier by coupling great command-line tools with comprehensive web-based and open documentation, ruff check has one huge advantage over other linters. Each time it finds a problem with your code, you’re given a code with the linting rule your code triggered. Usually the fix is simple enough, but sometimes you’ll question why the rule exists, and whether you should suppress that rule for your project. Thankfully, this is easy to research in one of two ways. First, there are public “ruff rule pages,” more than 800 and counting. You can find these via Google or by searching the ruff docs site. For example, here is their explainer for line-too-long (E501).

But you can also ask ruff itself for an explanation, e.g. ruff rule E501 will explain that same rule with command-line output. The tl;dr is that it is triggered when you have a line longer than a certain number of characters in your code, and ruff makes some exceptions for convenience and pragmatism. Since this command outputs Markdown text, I like to pipe its output to the glow terminal Markdown renderer.
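In keeping with the subprocess theme from earlier, here is a hedged sketch of that same pipe done from Python rather than from the shell. The helper names pipe and explain_rule are hypothetical, and the sketch assumes that glow reads Markdown from stdin when given - as its argument; if glow isn't on the PATH, it falls back to ruff's raw output.

```python
import shutil
import subprocess


def pipe(producer, consumer):
    """Run `producer | consumer` without a shell; return the consumer's exit code."""
    # Start the producer with its stdout connected to a pipe...
    with subprocess.Popen(producer, stdout=subprocess.PIPE) as source:
        # ...and hand the read end of that pipe to the consumer as its stdin.
        result = subprocess.run(consumer, stdin=source.stdout)
    return result.returncode


def explain_rule(code):
    """Show a ruff rule explanation, rendered by glow when it is available."""
    ruff_cmd = ["ruff", "rule", code]
    if shutil.which("glow") is None:
        # No renderer installed: fall back to ruff's raw Markdown output.
        return subprocess.run(ruff_cmd).returncode
    # Assumption: "glow -" renders Markdown read from stdin.
    return pipe(ruff_cmd, ["glow", "-"])
```

With ruff installed, calling explain_rule("E501") from a REPL should show the same explanation as the rule page, rendered in the terminal.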

There’s a lot to love about ruff check, in terms of finding small bugs in your code before runtime, and alerting you to style issues. But when combined with ruff format, it becomes a real powerhouse.

Upgrading black to ruff format

The Python code formatting tool black deserves all the credit for settling a lot of pointless debates on Python programmer teams and moving Python teams in the direction of automatic code formatting. Its name is a tongue-in-cheek reference to the aphorism often attributed to Henry Ford, who supposedly said that customers of his then-new Model T car (originally released in 1908!) could have it in whatever color they wanted — “as long as that color is black.” I think the message you’re supposed to take away is this: sometimes too much choice is a bad thing, or, at least, beside the point. And that is certainly true for Python code formatting in the common case.

As the announcement blog post described it, the ruff format command took its inspiration from black, even though it is a from-scratch formatter implementation. To quote: “Ruff’s formatter is designed as a drop-in replacement for Black. On Black-formatted Python projects, Ruff achieves >99.9% compatibility with Black, as measured by changed lines.”

There are some nice advantages to having the linter and formatter share a common codebase. The biggest is probably that the ruff team can expose both as a single Language Server Protocol (LSP) compatible server. That means it’s possible to use ruff’s linting and formatting rules as a “unified language backend” for code editors like VSCode and Neovim. This is still a bit experimental, although I hear good things about the ruff plugin for VSCode. Though vim is my personal #1 editor, VSCode is my #2 editor… and VSCode seems to be every new programmer’s #1 editor, so that level of support is comforting.

My personal Zen arises from a good UNIX shell and a good Python REPL

I’m not one to bike shed, yak shave, or go on a hero’s journey upgrading my dotfiles. I like to just sit at my laptop and get some work done. But, taking a little time a few weeks back — during Recurse Center and its Never Graduate Week (NGW 2024) event, incidentally — to achieve Zen in my Python tooling has paid immediate dividends (in terms of code output, programming ergonomics, and sheer joy) in the weeks afterwards.

My next stop for my current project is pytest via this rather nice-looking pytest book. I already use pytest and pytest-cov a little, at the suggestion of a friend, but I can tell that pytest will unlock another little level of Python productivity for me.

As for a REPL… the best REPL for Python remains the beloved ipython project, as well as its sister project for when you want a full-blown browser-based code notebook environment, Jupyter. As I mentioned, I use that make devenv target (which itself uses uv) to create the venv for these tools, and then run them out of there.

I’m still not missing autocomplete for Python code in my vim editor because… what’s the point? When I can run a full Python interpreter or kernel and get not just function and method and docstring introspection, but also direct inspection of my live Python objects at runtime? That’s the good stuff. This is how I’ve been getting my brain into that sweet programming flow-state, making some jazz between my ideas and the standard library functions, Python objects, Linux system calls, and data structure designs that fit my problem domain in just the right way.
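As a small taste of what that introspection looks like, here is a sketch using only the standard library, with subprocess.run as the subject. In ipython you would more likely type subprocess.run? and poke at objects with tab completion; the inspect calls below are just a plain-Python approximation of the same idea.

```python
import inspect
import subprocess
import sys

# What parameters does subprocess.run accept?
signature = inspect.signature(subprocess.run)
print(list(signature.parameters))

# First line of its docstring, roughly what help() would lead with.
print(inspect.getdoc(subprocess.run).splitlines()[0])

# Inspect a live object: run a trivial command, then look at the result.
result = subprocess.run(
    [sys.executable, "-c", "print('hi')"],
    capture_output=True,
    text=True,
)
print(type(result).__name__, result.returncode, result.stdout.strip())

# vars() lists the attributes stored on the live object.
print(sorted(vars(result)))
```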

Appendix: More Good Software

There are some other UNIX tools I’m going to mention briefly, just as a way of saying, “these tools kick ass, and I love that they exist, and that they are so well-maintained.” These are:

- restic: encrypt’d, dedupe’d, snapshot’d backups [2]
- rclone: UNIX-y access to S3, GCS, Backblaze B2
- vim: the trusty editor, continues never to fail me; RIP Bram Moolenaar
- tmux: as someone recently stated, tmux is worse is better
- zsh: together with powerlevel10k and oh-my-zsh, a pure delight

Other things I might discuss in a future blog post:

- why I stick with Ubuntu LTS on my own hardware
- my local baremetal server with a Raspberry Pi ssh bastion and a ZeroTier mesh network
- why I stick with DigitalOcean for WordPress and OpenVPN
- why I really enjoy Opalstack, the CentOS-based shared and managed Linux host, for hobby Django apps and nginx hosting

Are you noticing a common theme? … whispers “Linux.”

The way I’ve been bringing joy back to my life is a focus on Linux and UNIX as the kitchen of my software chef practice. Linux is on my desktop, and on my baremetal server; running in my DigitalOcean VM, and in my Raspberry Pi; in my containers, or in my nix-shell, or in my AWS/GCP public cloud nodes.

The truth is, Linux is everywhere in production. It might as well be everywhere in development, too.

Sorry: you’ll only be able to pry Linux from my cold, dead hands!

I hope you find your Zen soon, too, fellow Pythonistas.

Footnotes

1. Doug Hellmann is a treasure for maintaining such an excellent website of Python module information, by the way. Take a look at his page on the fileinput module for another nice example of this kind of documentation.
2. A year after this blog post was published, I wrote a long post about my love of restic and rclone; it includes a detailed explainer on how I use them for my backups of my root filesystem, $HOME folder, and photography SSD drive. Read about that in “Linux backup workflow for hackers with restic, rclone, Backblaze B2”. The use of Backblaze B2 is to get offsite cloud storage of those backups.

Yes! Come to the dark side.